NeuroPNT: Brain-inspired General Intelligent Positioning, Navigation, and Timing System for Neurorobots

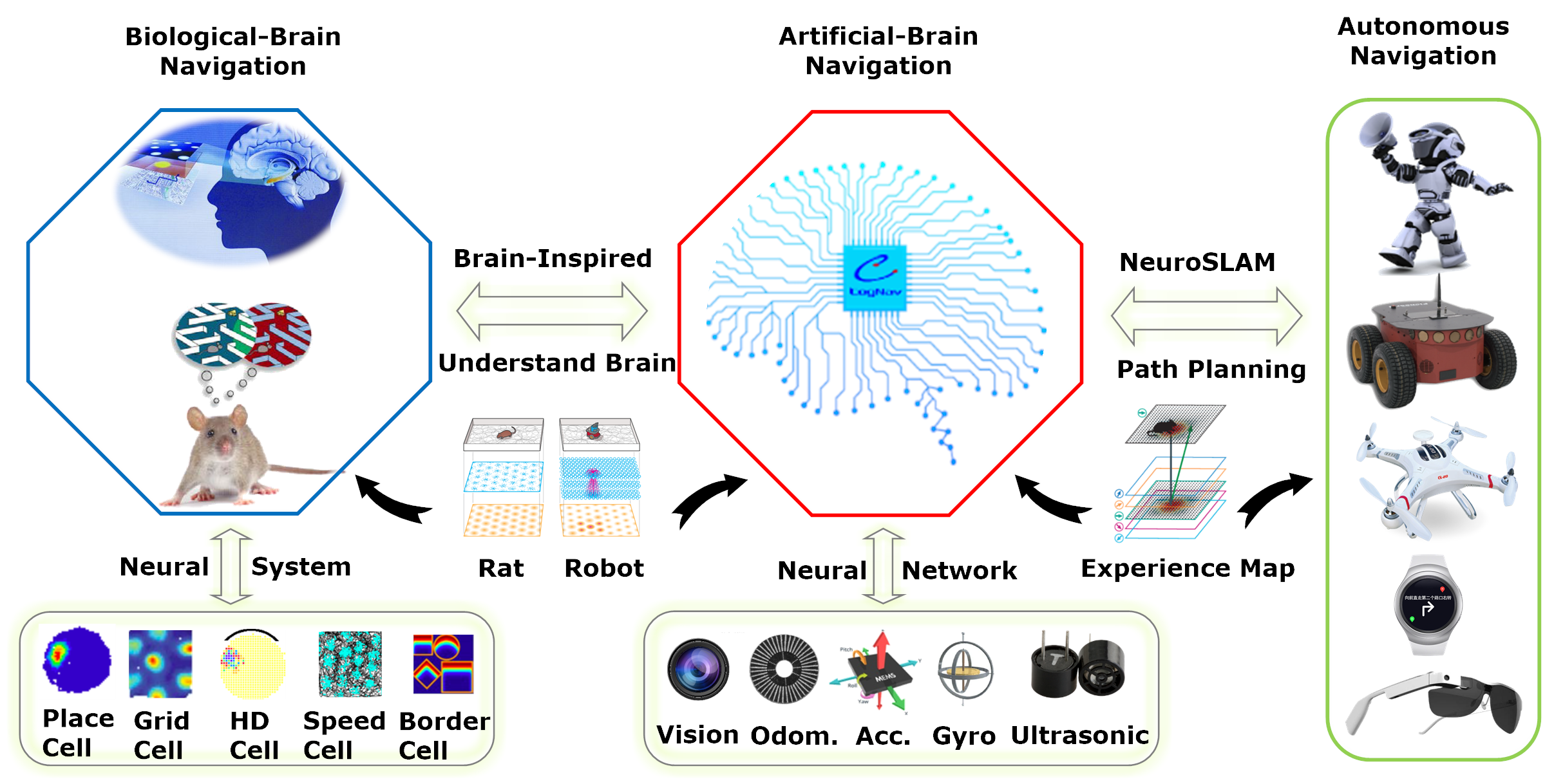

The NeuroPNT Project aims to model the neural mechanisms in the brain underlying positioning, navigation, timing, spatial learning, and memory, in order to develop new brain-inspired general intelligent positioning, navigation, and timing (PNT) technologies for brain-inspired general intelligent robots.

This project is partly funded by:

National Natural Science Foundation of China. Brain Inspired 3D Navigation with Neuromorphic Computing Chips. 2023.01-2025.12.

Natural Science Foundation of Jiangsu Province. Research on Brain-Inspired Navigation Theory and Method for Unmanned System in Complex Environments. 2024.10-2027.10.

Natural Science Foundation of Beijing. Research on High Realtime Brain-Inspired Localization for Unmanned System. 2026.01-2027.12.

Participants: Fangwen Yu (Leader), etc.

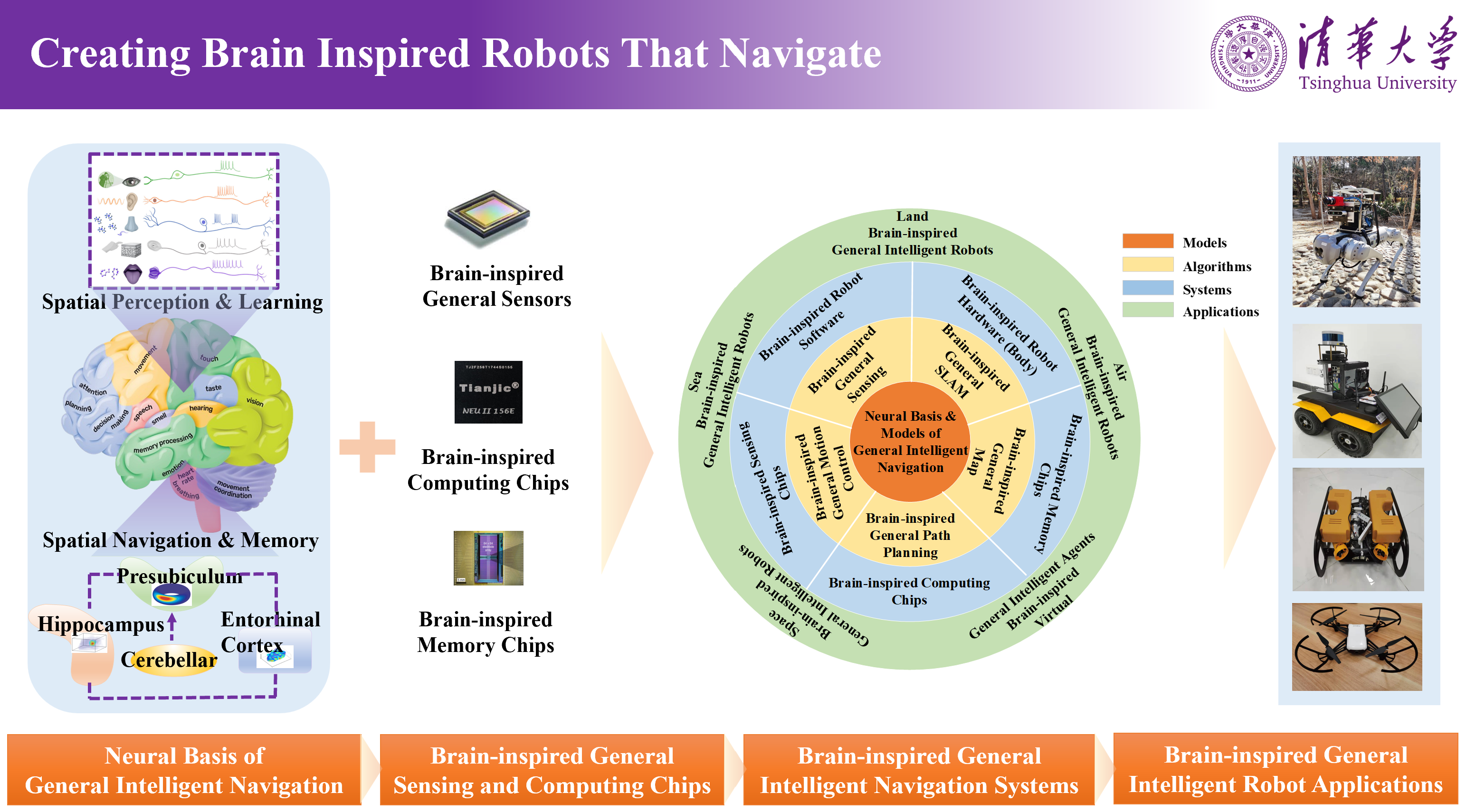

NeuroRobot: Brain-inspired General Intelligent Robot with Neuromorphic Intelligence

The NeuroRobot Project aims to create brain-inspired general intelligent robots with neuromorphic intelligence. The project investigates neuromorphic robot research platforms and explores neuromorphic robot applications.

Participants: Fangwen Yu (Leader), etc.

NeuroROS: Brain-inspired Robot Operating System

The NeuroROS project aims to develop NeuroROS, an open-source brain-inspired robot operating system that integrates neuromorphic sensors, neuromorphic computing chips, neuromorphic memory chips, neuromorphic algorithms, neuromorphic communication, neuromorphic applications, and neuromorphic tools for next-generation brain-inspired intelligent robotics.

Participants: Fangwen Yu (Leader), etc.

NeuroSLAM: Brain-inspired SLAM System for 3D Environments

The NeuroSLAM Project aims to model the neural mechanisms in the brain underlying 3D navigation and 3D spatial cognition, in order to develop new neuromorphic 3D SLAM and navigation technologies for space-, air-, land-, and sea-based neuromorphic robots and vehicles.

This project is partly funded by:

China Postdoctoral Science Foundation. NeuroMap: Brain-Inspired Encoding and Memory of 3D Spatial Experience Map with Neuromorphic Chips. 2021.11-2023.07.

National Natural Science Foundation of China. Brain Inspired 3D Navigation with Neuromorphic Computing Chips. 2023.01-2025.12.

More info on www.NeuroSLAM.net

Participants: Fangwen Yu (Leader), Prof. Michael Milford, Prof. Jianga Shang, etc.

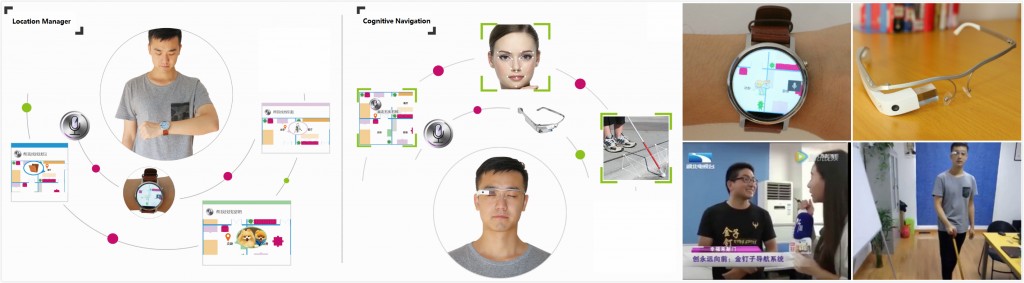

WearNav: Wearable Indoor Cognitive Navigation System

2015.12 – 2017.12

The project was supported by the Fundamental Research Funds for National University of CUG (No. 1610491T08). It aims to build an indoor cognitive navigation system based on smart wearable devices such as smart glasses and smart watches. The WearNav system was built on an intelligent hybrid indoor positioning approach that fuses Wi-Fi, BLE, PDR, and vision, enhanced by spatial model-aided localization. In addition, a cognitive navigation model was developed that uses indoor semantic landmarks to improve navigation performance and reduce users’ cognitive load.
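To make the idea of fusing PDR with absolute fixes concrete, here is a minimal sketch, not WearNav's actual algorithm. A PDR position is advanced one detected step at a time from an assumed step length and heading, then blended with an absolute Wi-Fi/BLE fix by inverse-variance weighting (a one-step, per-axis Kalman-style update with isotropic variance). The `Position` type, the function names, the heading convention (radians clockwise from north), and all variances are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Position:
    x: float  # metres east in a local floor frame (assumed convention)
    y: float  # metres north in a local floor frame (assumed convention)


def pdr_step(pos: Position, step_length: float, heading_rad: float) -> Position:
    """Dead-reckon one detected step; heading is measured clockwise from north (+y)."""
    return Position(pos.x + step_length * math.sin(heading_rad),
                    pos.y + step_length * math.cos(heading_rad))


def fuse(pdr: Position, pdr_var: float, fix: Position, fix_var: float) -> tuple[Position, float]:
    """Blend a PDR estimate with an absolute fix (e.g. Wi-Fi/BLE) by inverse-variance weighting.

    gain = pdr_var / (pdr_var + fix_var): the noisier PDR is, the more the fix is trusted.
    """
    gain = pdr_var / (pdr_var + fix_var)
    fused = Position(pdr.x + gain * (fix.x - pdr.x),
                     pdr.y + gain * (fix.y - pdr.y))
    fused_var = pdr_var * fix_var / (pdr_var + fix_var)  # equals (1 - gain) * pdr_var
    return fused, fused_var
```

Because the gain grows with the accumulated PDR variance, dead-reckoning drift is pulled back toward the absolute fix more strongly the longer PDR has run unaided, while the fused variance is always smaller than either input variance.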

Participants: Fangwen Yu (Leader), Yifan Zhang, Zhiyong Zhou, Xinyi Tang, Wen Chen, Yongfeng Wu, Jianga Shang, etc.

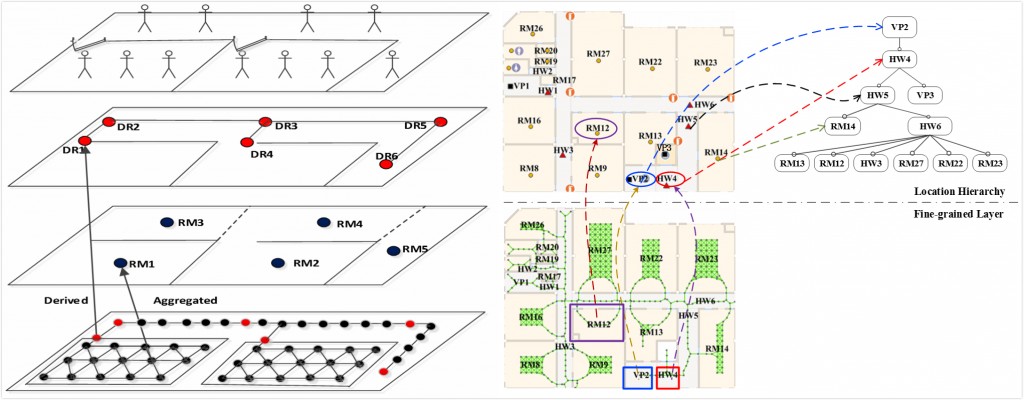

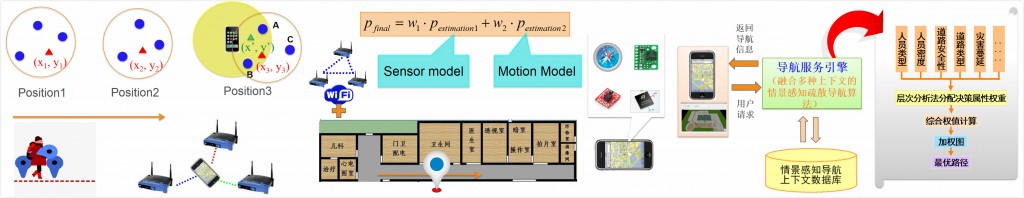

SeLoMo: Multi-Layered Indoor Semantic Location Model

2013.01 – 2016.12

The project was supported by the National Natural Science Foundation of China (No. 41271440). It aims to build a multi-layered indoor semantic location model to support enhanced indoor positioning and intelligent location-based services, such as position queries, nearest-neighbour queries, range queries, navigation, and visualization, for context-aware applications in ubiquitous computing environments, especially indoor spaces. In particular, the model can represent spatial features for indoor positioning, such as floor maps, sensory landmarks, spatial constraints, spatial topology, and signal maps, which can be used for enhanced spatial model-aided indoor positioning.
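As an illustration of how a topological layer can answer a nearest-neighbour query, the sketch below runs a Dijkstra search over walking distance and stops at the first node of the requested semantic category. It is a minimal sketch under assumed data, not SeLoMo's implementation: `GRAPH`, `CATEGORY`, `nearest`, and all node names and distances are hypothetical.

```python
import heapq

# Hypothetical topological layer: node -> list of (neighbour, walking distance in metres).
GRAPH = {
    "room101": [("corridor1", 3.0)],
    "corridor1": [("room101", 3.0), ("corridor2", 10.0), ("stairs1", 4.0)],
    "corridor2": [("corridor1", 10.0), ("toilet1", 2.0), ("exit_east", 6.0)],
    "stairs1": [("corridor1", 4.0), ("exit_west", 5.0)],
    "toilet1": [("corridor2", 2.0)],
    "exit_west": [("stairs1", 5.0)],
    "exit_east": [("corridor2", 6.0)],
}

# Hypothetical semantic layer: node -> category label.
CATEGORY = {"room101": "office", "toilet1": "toilet",
            "exit_west": "exit", "exit_east": "exit"}


def nearest(graph, category, start, wanted):
    """Return (node, network distance) of the closest node labelled `wanted`, or None."""
    dist = {start: 0.0}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry superseded by a shorter path
        if category.get(node) == wanted:
            # Dijkstra settles nodes in order of distance, so the first match is nearest.
            return node, d
        for nbr, w in graph.get(node, []):
            nd = d + w
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                heapq.heappush(heap, (nd, nbr))
    return None
```

For example, `nearest(GRAPH, CATEGORY, "room101", "exit")` returns `("exit_west", 12.0)`: the west exit is reached via the stairs in 12 m, whereas the east exit is 19 m away along the corridors. Using network distance rather than straight-line distance matters indoors, where walls make Euclidean distance a poor proxy for walking effort.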

Participants: Jianga Shang, Fangwen Yu, Zhiyong Zhou, Xinyi Tang, Jinjin Yan, Xin Wang, Chao Wang, Jie Ma, etc.

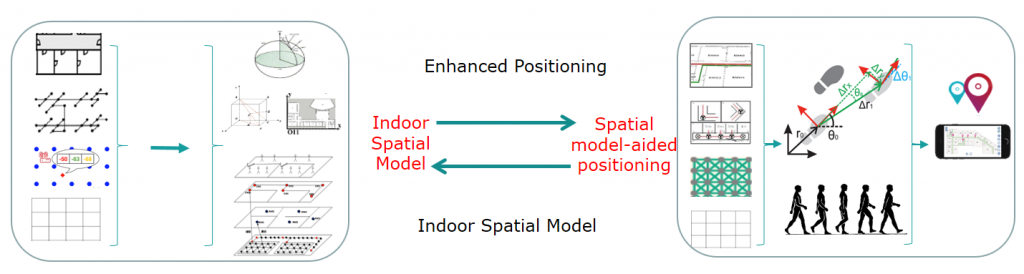

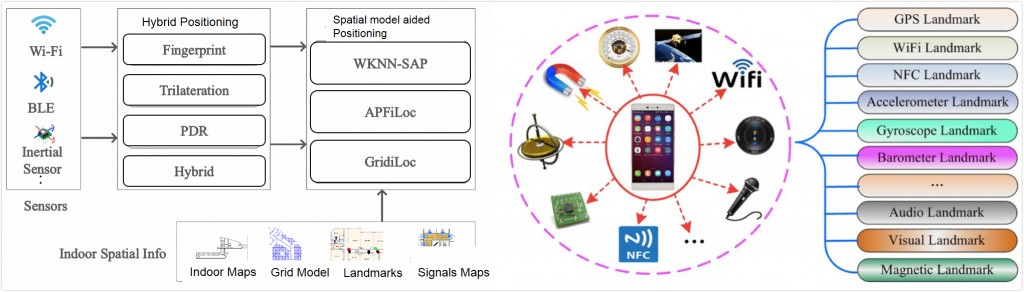

SpaLoc: Spatial Model-aided Indoor Localization

2016.05 – 2017.09

The project was supported by the National Key Research and Development Program of China (No. 2016YFB0502200). A key challenge in indoor positioning is balancing low cost, high accuracy, and ubiquity. The project aims to use spatial information to improve indoor positioning accuracy and robustness. The spatial information includes geometry, topological graphs, grid models, sensory landmarks, signal characteristic maps, etc., which can be used to constrain motion and improve positioning accuracy at low cost.
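As an illustration of using geometry to constrain motion, one simple technique is to reject any motion update whose step segment crosses a wall. The sketch below uses a standard orientation-based segment intersection test. The wall list, the function names, and the "stay at the previous position" policy are assumptions for illustration, not SpaLoc's method; particle-filter implementations typically down-weight or discard the offending particle instead.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Wall = Tuple[Point, Point]  # hypothetical wall representation: two endpoints in metres


def orientation(a: Point, b: Point, c: Point) -> int:
    """Sign of the cross product (b - a) x (c - a): 1 = left turn, -1 = right turn, 0 = collinear."""
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return (cross > 0) - (cross < 0)


def within_box(a: Point, b: Point, p: Point) -> bool:
    """True if p lies in the bounding box of segment ab (used only for collinear cases)."""
    return (min(a[0], b[0]) <= p[0] <= max(a[0], b[0])
            and min(a[1], b[1]) <= p[1] <= max(a[1], b[1]))


def segments_intersect(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    o1, o2 = orientation(p1, p2, q1), orientation(p1, p2, q2)
    o3, o4 = orientation(q1, q2, p1), orientation(q1, q2, p2)
    if o1 != o2 and o3 != o4:
        return True  # proper crossing, or one endpoint touching the other segment
    # Collinear endpoint: segments meet only if that endpoint lies on the other segment.
    return ((o1 == 0 and within_box(p1, p2, q1)) or (o2 == 0 and within_box(p1, p2, q2))
            or (o3 == 0 and within_box(q1, q2, p1)) or (o4 == 0 and within_box(q1, q2, p2)))


def constrain_step(prev: Point, proposed: Point, walls: List[Wall]) -> Point:
    """Accept the PDR-proposed position unless the step from prev crosses any wall."""
    for a, b in walls:
        if segments_intersect(prev, proposed, a, b):
            return prev  # reject the step: a pedestrian cannot walk through a wall
    return proposed
```

With a single wall from (2, -1) to (2, 1), a step from (1, 0) to (3, 0) is rejected, while a step from (1, 2) to (3, 2) passes above the wall's end and is accepted.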

Participants: Jianga Shang, Wen Cheng, Yongfeng Wu, Pan Chen, Fangwen Yu, Xuke Hu, Ao Guo, Fuqiang Gu, etc.

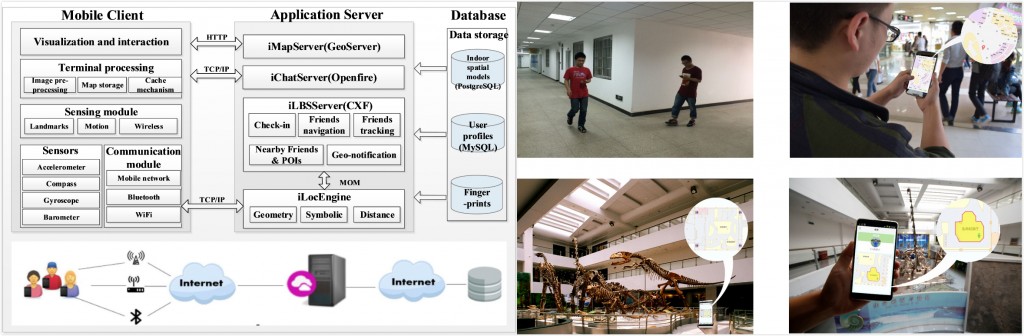

UbiEyes: Universal Indoor Real-time Positioning System

2012.01 – 2014.12

The project was supported by the Fundamental Research Funds for National University (No. CUGL090247). It aims to develop a universal indoor real-time positioning system. The UbiEyes system is an independent software platform for building and supporting real-time positioning or location-aware applications written in Java. The system includes several core service components, such as the Localization Engine (uLocEngine), LBS Server (uLBSServer), Context Data Engine (CDE), and Message-Oriented Middleware (MOM), as well as several applications, including location monitoring software (uLocView), mobile location-based application software (uLocMobile), and system management software (uManager). The system supports different localization technologies, including Wi-Fi, BLE, PDR, nanoLOC, ZigBee, GPS, etc., and uses a variety of strategies to enhance positioning accuracy. It also integrates the indoor semantic location model to provide basic indoor location-based services.

Participants: Jianga Shang, Fangwen Yu, Fuqiang Gu, Xuke Hu, Ao Guo, Bin Ge, etc.

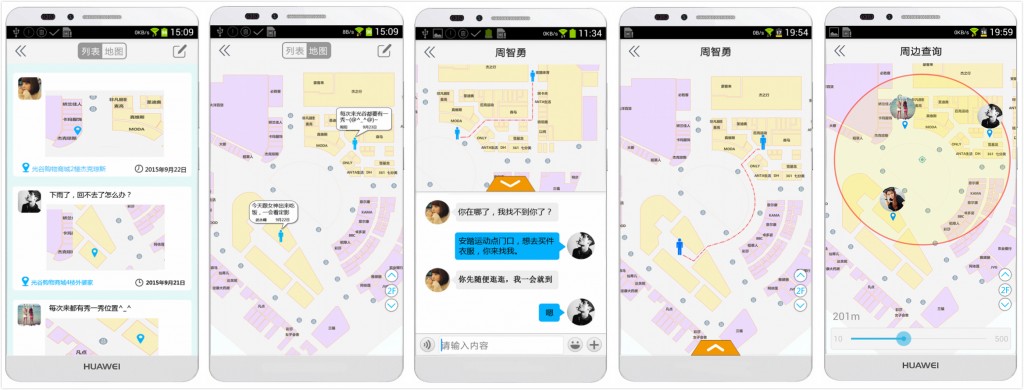

iSoNe: Indoor Location-based Mobile Social Network System

2013.12 – 2015.11

The project was supported by the Fundamental Research Funds for National University (No. 1310491B07). It aims to build a mobile social network system based on indoor real-time positioning and location-based query technologies. The iSoNe system includes several core service components, such as the Localization Engine (iLocEngine), LBS Server (iLBSServer), Chat Server (iChatServer), Database, and Message-Oriented Middleware (MOM), as well as a mobile application for Android (iSoNe App). Its core functions include peer-friend continuous navigation, continuous nearby-friend queries, fine-grained check-in, friend tracking, fine-grained geo-notification, etc. The system was tested in Optic Valley Mall, the Yifu Museum of CUG, and other venues.

Participants: Zhiyong Zhou, Xuke Hu, Xinyi Tang, Rui Wang, Yang Zhou, Yue Zhuo, Fangwen Yu, Jianga Shang, etc.

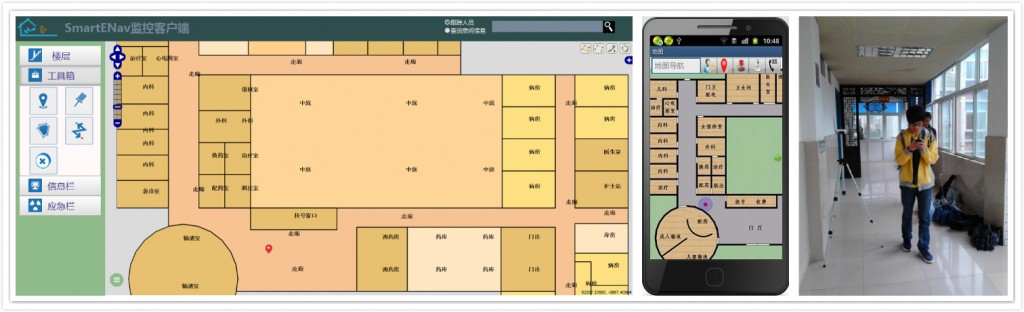

SmartENav: Context-Aware Smart Indoor Navigation System for Emergencies

2011.12 – 2013.12

The project was supported by the Fundamental Research Funds for National University (No. 1310491B07). It aims to build a smart indoor navigation system for emergencies based on context awareness and real-time positioning. It supports hybrid indoor positioning on smartphones, fusing Wi-Fi, BLE, and PDR. Its core functions include emergency exit navigation, rescue attendant tracking, security monitoring, etc. It was tested in the school hospital of CUG.

Participants: Fangwen Yu (Leader), Jinjin Yan, Zhiyong Zhou, Xiao Zhang, Xiaonan Wang, Xuke Hu, Donghe Kang, Yibo Ge, Jianga Shang, etc.